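A team of computer scientists, engineers, mathematicians and cognitive scientists, led by the University of Cambridge, has developed an open-source evaluation platform called CheckMate, which allows human users to interact with large language models (LLMs) and evaluate their performance.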

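The researchers tested CheckMate in an experiment in which human participants used three LLMs (InstructGPT, ChatGPT and GPT-4) as assistants for solving undergraduate-level mathematics problems.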

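The team studied how well LLMs can assist participants in solving problems. Despite a generally positive correlation between a chatbot's correctness and its perceived helpfulness, the researchers also found instances where the LLMs were incorrect but still useful to participants. However, certain incorrect LLM outputs were judged to be correct by participants. This was most notable in LLMs optimized for chat.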

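The researchers suggest that models that communicate uncertainty, respond well to user corrections, and provide a concise rationale for their recommendations make better assistants. Given the current shortcomings of LLMs, human users should verify their outputs carefully.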

The results, published in the Proceedings of the National Academy of Sciences, could help inform AI literacy training and help developers improve LLMs for a wider range of uses.

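While LLMs are becoming increasingly powerful, they can also make mistakes and provide incorrect information, which could have negative consequences as these systems become more integrated into our everyday lives.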

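"LLMs have become wildly popular, and evaluating their performance in a quantitative way is important, but we also need to evaluate how well these systems work with and can support people," said first author Albert Jiang, from Cambridge's Department of Computer Science and Technology. "We don't yet have comprehensive ways of evaluating an LLM's performance when interacting with humans."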

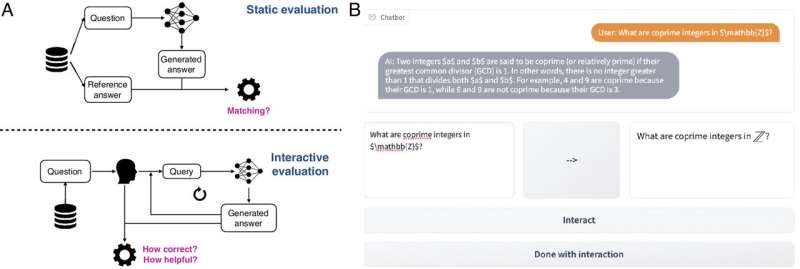

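The standard way to evaluate LLMs relies on static pairs of inputs and outputs, which disregards the interactive nature of chatbots and how that changes their usefulness in different scenarios. The researchers developed CheckMate to help answer these questions. It is designed for, but not limited to, applications in mathematics.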

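"When talking to mathematicians about LLMs, many of them fall into one of two main camps: either they think that LLMs can produce complex mathematical proofs on their own, or that LLMs are incapable of simple arithmetic," said co-first author Katie Collins from the Department of Engineering. "Of course, the truth is probably somewhere in between, but we wanted to find a way of evaluating which tasks LLMs are suitable for and which they aren't."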

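The researchers recruited 25 mathematicians, from undergraduate students to senior professors, to interact with three different LLMs (InstructGPT, ChatGPT and GPT-4) and evaluate their performance using CheckMate. Participants worked through undergraduate-level mathematical theorems with the assistance of an LLM and were asked to rate each individual LLM response for correctness and helpfulness. Participants did not know which LLM they were interacting with.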

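The researchers recorded the sorts of questions participants asked, how participants reacted when presented with a fully or partially incorrect answer, whether and how they attempted to correct the LLM, and whether they asked for clarification. Participants had varying levels of experience with writing effective prompts for LLMs, and this often affected the quality of the responses the LLMs provided.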

An example of an effective prompt is "what is the definition of X" (X being a concept in the problem), as chatbots can be very good at retrieving concepts they know of and explaining them to the user.

"One of the things we found is the surprising fallibility of these models," said Collins. "Sometimes, these LLMs will be really good at higher-level mathematics, and then they'll fail at something far simpler. It shows that it's vital to think carefully about how to use LLMs effectively and appropriately."

However, like the LLMs, the human participants also made mistakes. The researchers asked participants to rate how confident they were in their own ability to solve the problem they were using the LLM for. Participants who were less confident in their own abilities were more likely to rate incorrect generations by the LLM as correct.

"This kind of gets to a big challenge of evaluating LLMs, because they're getting so good at generating nice, seemingly correct natural language, that it's easy to be fooled by their responses," said Jiang. "It also shows that while human evaluation is useful and important, it's nuanced, and sometimes it's wrong. Anyone using an LLM, for any application, should always pay attention to the output and verify it themselves."

Based on the results from CheckMate, the researchers say that newer generations of LLMs are increasingly able to collaborate helpfully and correctly with human users on undergraduate-level math problems, as long as the user can assess the correctness of LLM-generated responses.

Even if the answers may be memorized and can be found somewhere on the internet, LLMs have the advantage over traditional search engines of being flexible in their inputs and outputs (though they should not replace search engines in their current form).

While CheckMate was tested on mathematical problems, the researchers say their platform could be adapted to a wide range of fields. In the future, this type of feedback could be incorporated into the LLMs themselves, although none of the CheckMate feedback from the current study has been fed back into the models.

"These kinds of tools can help the research community to have a better understanding of the strengths and weaknesses of these models," said Collins. "We wouldn't use them as tools to solve complex mathematical problems on their own, but they can be useful assistants if the users know how to take advantage of them."

More information: Katherine M. Collins et al., Evaluating language models for mathematics through interactions, Proceedings of the National Academy of Sciences (2024). DOI: 10.1073/pnas.2318124121

Journal information: Proceedings of the National Academy of Sciences

Provided by University of Cambridge

Citation: New open-source platform allows users to evaluate performance of AI-powered chatbots (2024, June 4) retrieved 4 June 2024 from https://techxplore.com/news/2024-06-source-platform-users-ai-powered.html